Devs and the Culture of Tech - Part 2

The Power of Simplifying Reality

This piece is from a series of essays/posts/rants about the TV show Devs and the ideology of tech. If you haven’t read it yet, I’d suggest starting with part 1. Or don’t. You do you.

In my previous post I ended by pointing to three key consequences of a technologically determinist mindset. Briefly, these were:

The abstraction and simplification of reality in a way that obscures the politics and power afforded to those who get to do it.

The way that determinism allows the deflection and evasion of responsibility for new technologies, particularly from groups that actively work to bring them about.

The underlying anti-humanism of it all that positions humans as lesser than tech, and denigrates non-computational capacities like reason, emotion and intuition.

In this post we begin to look at these themes by examining the simplification of reality and the power it can afford.

Forest and the Multiverse

As we saw in the previous entry, Forest, CEO of Amaya and tech entrepreneur, is desperate to prove that the determinist tramline exists - to prove that all of reality has unfurled along a singular thread of cause and effect. Quite why he is so fixated on this idea is not evident to the viewer in the early episodes, but we are shown the extent of his devotion to it. When one of Forest’s Devs engineers, Lyndon, vastly improves the performance of their experimental predictive algorithm by supplanting Forest’s singular timeline model with a ‘many-worlds’ interpretation, Forest is incensed.

Many-worlds posits that every moment diverges into infinite possible worlds and, whilst we experience just one strand of this infinitely branching path, everything that could happen will happen. It is, they claim in the show, still a deterministic model, since it holds that all outcomes, all chains of cause and effect, do happen. But it is no longer a singular inevitable timeline that can be traversed back and forth like a video progress bar. It is an infinitely branching tree of variations: probabilistic, complex and messy.

Much to everyone’s surprise, Lyndon is fired for ‘undermining’ the project. Lyndon’s change brings Forest’s project much closer to realisation, yet it challenges the assumption he is clinging to, that reality is inherently deterministic. For Forest, choice must be an illusion; history could not have gone any other way. Only then can a system that projects backwards into that history guarantee a perfect reproduction.

Exactly why Forest is so dedicated to the tramline model is complex. It is on one level a matter of personal trauma and a need for absolution (something we’ll look at in another part of this series). However, it is also a highly instrumentalist motivation. Forest has a goal - to be able to simulate perfectly any moment in time - and he has a mental model of reality that makes that theoretically possible.

This requirement to simulate perfectly is ultimately driven by Forest’s unspoken motivations (more on that later), but the given justification for why the Devs team must try to force reality to conform to Forest’s model is an instrumental one - the entire animus of the project is to achieve a particular outcome, regardless of its feasibility or actual consequences.

When he admonishes Lyndon for introducing the many-worlds model into the algorithm, it is for instrumental reasons, for the goals of the project. Many-worlds challenges Forest’s existing tramline model and so challenges the feasibility of his goals. Forest’s outrage is unexpected because it appears that Lyndon has proven Forest’s goal is possible, so what is the problem?

Determinism and Mastery

The Devs project requires that the simulation be an exact reproduction, not a hair out of place, but a probabilistic model means that the outcome can never be certain, never fully determined. When psychologist Sherry Turkle¹ talked to early computer users for her 1984 book, she argued that her interviews highlighted a deep need for mastery over something in the world. The computer did exactly as it was told; it was predictable, deterministic in its operation. For Turkle it was possible that if these experiences happened during key stages of someone’s development, their overall relation to computing, and to the external world as a whole, might become one of mastery, with an expectation of direct, predictable control.

This is an expectation of an orderly, predictable, deterministic and systematic reality. For Golumbia², writing about Turkle’s work two decades later, it is this desire for mastery that drives the desire to render all things as computationally interpretable. If reality can be subsumed into the computer, then the person with mastery over the computer can feel they have mastery over reality³. Forest’s approach is to take a complex reality, instrumentalise his relationship with it, cut away what he deems irrelevant or unnecessary for that goal, and simplify it until it is manageable and renderable into a computational model of thought. This is, say Malazita and Resetar⁴ in their work on computer science (CS) education, standard practice.

CS education operates under a particular ‘epistemic infrastructure’, which in simple terms means that it values certain ways of thinking about and engaging with the world. Students who best exemplify that way of thinking are ‘better’ students and more likely to find success within the field itself. What matters most within CS education is ‘abstraction’. This is the process of discarding irrelevant information and trimming down a phenomenon to the fundamental components necessary to represent it computationally, to systematise it structurally and ultimately to achieve your goals. Good computer scientists are good at abstraction: they translate complex phenomena or problems into a form that is computationally parseable and logically valid, and that gets to the essence of the thing itself without extraneous information getting in the way.

Abstractions are of course absolutely necessary in many fields. When social scientists talk of concepts, social constructs or theories, those are themselves abstractions, ideas you can grasp well enough to help you understand some aspect of the phenomenon they refer to. Abstraction is not unique to computer science. It is a fundamental component of rationalism, and rationalism is itself foundational to modernity, bureaucracy and neoliberal orthodoxy. Rationalism privileges logical, abstracted and systematic reasoning above non-logical thinking like emotion, intuition or even sensory input (that stick in the pond may look bent, but I can reason that it is an illusion of refraction). Gottfried Leibniz is the exemplary rationalist and is closely tied to the philosophical history of computing. Logic and the abstraction of the world into something that could be rendered into a formula of mathematically related symbols was, for Leibniz, the only valid form of cognition, with all other forms simply being failed cognition⁵.

The danger lies in forgetting that the abstraction is not the reality itself, a slip that O’Neill⁶ refers to as idealisation. If someone’s abstraction becomes idealised and reality fails to live up to it, it is reality that is seen to be at fault rather than the abstraction. Abstractions are always partial representations of the thing itself, always fundamentally defective in that they cannot fully represent it. Abstraction is always the process of separating the inseparable.

“[T]he rationalist, in a sense, believes his abstractions; that is, he forgets that they are partial views of reality and comes to think they have somehow captured its essence. His abstractions are thus turned into O’Neill’s idealizations, as he is led to deny, if not the very existence of the factors he is leaving out, then at least their relevance.”⁷

Later, when Lyndon visits Stuart to ask for his help getting his job back, he points out that assuming many worlds worked far better than assuming just one, as Forest does, the implication being that reality is many-worlds. Forest is wrong, and his insistence on a deterministic mindset is not only harming the project, it is out of line with reality. If Forest wants just one singular timeline, he needs to change the laws of reality. Forest, says Stuart, is a tech genius - “those laws are secondary”.

The Politics of Rationality

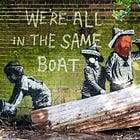

“They’re having the tech nerd’s wettest dream. The one that reduces everything to nothing”

Crucially, the process of abstraction is a process of judgement by individuals who are making qualitatively driven choices as to what matters and what does not. As such, reality is simplified to only that which can be included in a model of reality and is judged necessary to include. This abstraction is then given greater validity because of the posture of rationality, as judgement becomes framed as calculation, a product of higher order cognition. It is what David Golumbia referred to as the ‘Political authority of computationalism’. Anyone who insists on bringing in factors outside of the abstraction, particularly social issues, is considered to be outside this frame of political authority - their politics are tarred as irrational, a failure to sufficiently abstract, and therefore as lacking political authority.

The apoliticism of the rational technocrat is not an absence of politics, but unexamined politics - the presumption that their position is a political default. Nowhere is this better expressed than in the current San Francisco movement of the Grey Tribe. Led by prominent Silicon Valley VCs pushing concepts developed amongst ‘Rationalist’ subcultures, the movement holds a worldview that sees people as falling into either Blue or Red Tribes (yes, that maps directly to Democrats and Republicans), driven by political rather than rational animus. Of course, the most technical and rational can rise above this and instead align with the Grey Tribe. The Grey Tribe proclaims itself a separate third way that is not political, but rational, data driven and objective. It is the politics of the smug contrarian who declares that all of their ideas are not political, they are rational.

They are technocrats, seeing themselves as allegiant not to a political ideology but to technology and rationality itself. Peter Thiel’s proclamation that “we are in a deadly race between politics and technology”⁸ summarises this false distinction, which will often hide extreme political goals and the privileges that are sought by pursuing them. The Grey Tribe seeks to claim parts of the city for its own benefit, to enclave them from the wider messy society its members currently inhabit, and to establish its own privileged society, which they can run as they see fit. Claims of being at most politically ‘moderate’, despite proclaimed plans to bribe police, purge the city of undesirables and judge officials on the basis of their allegiance to tech company interests⁹, demonstrate the absolute naivety at play when tech culture delineates what is and is not political.

Whilst Forest’s unsaid assumptions, preferences and privileges shape the implications of his Devs project, he presents it from a position of objective, dispassionate distance. Forest’s personal motivations, his goals, his ideology and how he will benefit are also left unsaid. Instead he insists that reality be rendered simpler than it is, to fit his project’s requirements, yes, but more so to fit his own worldview. In the words of Lily in one episode, the Devs project, the deterministic simulation of all things, is “the tech nerd’s wettest dream. The one that reduces everything to nothing”.

1. Turkle, S. (1984). The Second Self: Computers and the Human Spirit. New York: Simon and Schuster.

2. Golumbia, D. (2009). The Cultural Logic of Computation. Cambridge, Mass: Harvard University Press.

3. I’m not necessarily convinced of the notion that working with computing causes this viewpoint, but I think it is reasonable to think that computing may attract those with a pre-existing attraction to an orderly and systematic universe.

4. Malazita, J. W., & Resetar, K. (2019). Infrastructures of Abstraction: How Computer Science Education Produces Anti-Political Subjects. Digital Creativity, 30(4), 300–312. doi:10.1080/14626268.2019.1682616.

5. Golumbia (2009) provides a good discussion of Leibniz’s role in computational thinking.

6. O’Neill, O. (1996). Towards Justice and Virtue: A Constructive Account of Practical Reasoning. Cambridge: Cambridge University Press.

7. Callahan, G. (2013). Liberty versus Libertarianism. Politics, Philosophy & Economics, 12(1), 48–67. doi:10.1177/1470594X11433739. p. 53.

8. Thiel, P. (2009, April 13). The Education of a Libertarian. Cato Unbound. Retrieved July 5, 2024, from https://www.cato-unbound.org/2009/04/13/peter-thiel/education-libertarian

9. Duran, G. (2024). The Tech Baron Seeking to Purge San Francisco of “Blues”. The New Republic. Retrieved June 10, 2024, from https://newrepublic.com/article/180487/balaji-srinivasan-network-state-plutocrat